In a significant move to protect children online, the UK government is proposing new legislation that would empower watchdogs to tackle the creation of AI-generated child sexual abuse material directly at its source.

Empowering Watchdogs to Test AI Models

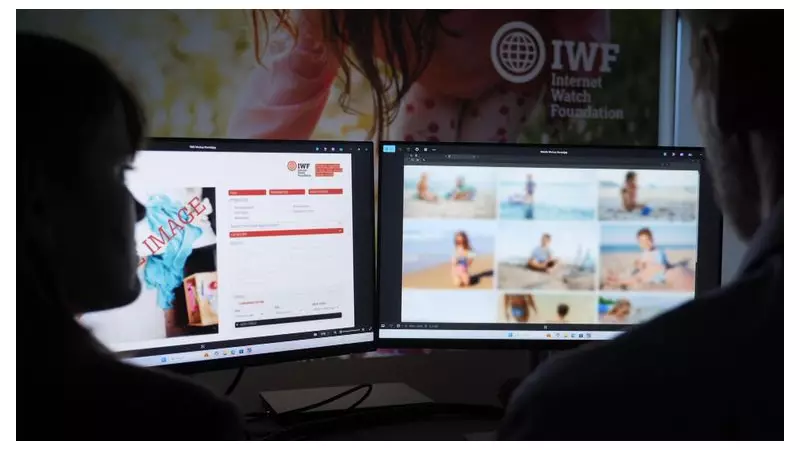

The planned amendment to the Crime and Policing Bill, tabled on Wednesday 12 November 2025, would give organisations such as the Internet Watch Foundation (IWF), as well as AI developers themselves, the legal authority to test AI models. The aim is to check that these systems cannot generate illegal child sexual abuse material, without the testers breaking the law in the process.

Kerry Smith, chief executive of the IWF, said this proactive approach would allow the foundation to "tackle the problem at the source" rather than waiting for harmful content to appear online before acting. The IWF, which removes hundreds of thousands of child abuse images from the internet every year, has hailed the proposal as a "vital step" towards making sure AI products are safe before they are released to the public.

A Growing Crisis of AI-Generated Abuse

The legislative push comes alongside alarming new data from the IWF, revealing that reports of AI-generated child sexual abuse material have more than doubled in the past year. The severity of this content is also intensifying.

The most serious Category A material, which includes images of penetrative sexual activity, has risen from 2,621 to 3,086 items. This category now accounts for 56% of all illegal material identified by the foundation, a sharp increase from 41% the previous year. The data also shows that girls are disproportionately targeted, featuring in 94% of all illegal AI images detected in 2025.

Mandatory Safeguards and Broader Protections

While the government's move has been welcomed, children's charities want the measures to go further. The NSPCC has called for this kind of safety testing to be made compulsory for all AI companies.

Rani Govender, policy manager for child safety online at the NSPCC, stated that while it is encouraging to see the AI industry being pushed to take greater responsibility, "to make a real difference for children, this cannot be optional." The charity insists the government must impose a mandatory duty on developers to embed safeguarding against child sexual abuse as a core component of product design.

Beyond child abuse material, the new rules would also allow AI models to be checked for safeguards against generating extreme pornography and non-consensual intimate images. The government has said it will convene a group of experts to ensure all testing is conducted safely and securely.

Technology Secretary Liz Kendall affirmed the government's commitment, saying, "These new laws will ensure AI systems can be made safe at the source." She said the change would prevent vulnerabilities that could put children at risk, and that empowering trusted organisations to scrutinise AI models would ensure child safety is designed in from the start rather than treated as an afterthought.