The Dark World of 'The Com': How Children Are Groomed Online Through Gamified Abuse

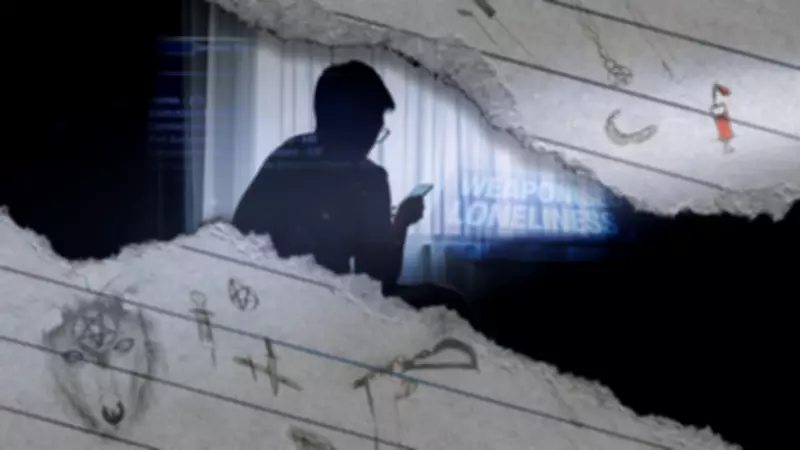

In a disturbing new trend, children are being drawn into sinister online networks where harmful content is exchanged not for money, but for social status. Known as "The Com," these groups operate across platforms like Discord and Telegram, exploiting vulnerabilities and grooming young victims through a gradual escalation of abuse.

A Mother's Heartbreaking Discovery

Rachel, a mother whose name has been changed to protect her family, tearfully recounts finding her teenage son's sketchbook filled with troubling drawings. The pages depicted knives, blood-filled syringes, and dismembered bodies, alongside phrases like "I am a sociopath" and "I just want to be loved." She had given him the sketchbook to help express his thoughts, but it instead revealed his descent into a dark online world that began when he was just 13.

Her son, who is neurodivergent, started out innocently playing games like Roblox before moving to Discord to share gaming tips. Rachel initially felt he was safe, and when his behaviour slowly changed, the moodiness and withdrawal seemed typical of adolescence. When his phone was confiscated at school, she and her husband discovered razor blades and knives hidden in his room. Upon accessing his phone, they found hundreds of memes whose pastel, cartoonish appearance concealed violent and self-harm imagery, captioned with messages like "Make me bleed and tell me how pretty I look."

Rachel realised her son had been exposed to a grooming "playbook" used by The Com, in which content escalated over ten months from mental health language to anorexia-style material, self-harm, and sexualised content. He was communicating with individuals from places like Croatia, which highlights the global nature of this threat. Now, with his smartphone replaced by a flip phone and strict monitoring in place, he is in a "great space" but remains unable to discuss the trauma. He has corrected his mother, saying, "I wasn't in a cult. I was being groomed."

The Global Threat of Gamified Harm

A new report by intelligence risk firm Resolver, shared exclusively with Sky News in partnership with the Molly Rose Foundation, describes The Com as an emerging global threat. Groups within this network, which constantly evolve, are linked to sadistic behaviour, extremist activity, and financial crime. Children and young adults are both victims and perpetrators, with harm being "gamified" to earn status points in what is termed an "ecosystem" of abuse.

Victims are specifically targeted for vulnerabilities such as neurodiversity or LGBTQ+ identities, with fake support groups used as bait. The report notes that few online spaces are entirely unaffected, and members drift between groups, making detection challenging. While early manifestations were in Europe and the US, Com activity now spans five continents, exploiting mental health issues, eating disorders, and self-harm struggles through forums, games, and messaging platforms.

How The Com Operates and Recruits

Sky News has identified over 90 group chats and channels related to The Com on Telegram, along with several Discord servers. Despite increasing attention, members continue to engage in criminal behaviour and to discuss it in publicly accessible spaces. Some groups focus on cybercrime, selling stolen streaming accounts and personal details, while others advertise "swatting services": fake threats to emergency services that provoke armed police responses. A price list viewed by Sky News offered a swatting call to a UK school for as little as $20 (£14.50), with videos showing members watching these incidents live on Discord as a spectator sport.

Other groups encourage vandalism and arson as prerequisites for membership; one group active in January linked to a news report on a church burning. The Com is most notorious for sexual exploitation and for manipulating victims into self-harm and suicide. Sky News found evidence that some groups still require users to force victims to ritually self-harm for membership, with conversations revealing that victimising people confers status. In one exchange, users praised an acquaintance for making a girl self-harm, while another user denigrated someone for targeting only girls with mental illness and boasted of exploiting victims with no prior self-harm history.

A Former Member's Regret and Parental Warnings

Sally, another mother whose name has been changed, shares her son's experience as a former Com member arrested for encouraging suicide. Described as an animal lover with a good sense of humour, he developed a "hideous" online alias while feeling isolated due to bullying. The Com flattered him, giving him tasks and a sense of belonging after he stumbled upon the network while gaming. Sally recalls him saying, "people keep getting me to do things I don't want to do," a statement that still haunts her. She urges parents to avoid "sleepwalking" into this nightmare, advising them to monitor their children's online activities closely and not assume safety just because they are at home.

Calls for Stronger Action and Regulatory Response

The Molly Rose Foundation criticises Ofcom's response as "appalling," accusing the regulator of being "asleep at the wheel." Chief executive Andy Burrows warns that the Online Safety Act is "too timid" to handle the complex mix of harms posed by The Com. The charity calls for self-harm and suicide to be treated as a national policing priority, similar to child sexual abuse. Oliver Griffiths, Ofcom's online safety group director, asserts that the regulator takes child protection seriously, with a dedicated team engaging platforms on Com groups. Jess Phillips MP, minister for safeguarding, pledges to use every power to shut down these networks, noting that Home Office-funded online officers safeguarded 1,748 children from abuse last year.

A Telegram spokesman states that the platform continually removes Com-associated groups, that its terms of service forbid acts like doxxing and calls for self-harm, and that AI tools proactively monitor for harmful content. Sky News has contacted Roblox and Discord for comment. For support, anyone feeling distressed can call Samaritans on 116 123 or email jo@samaritans.org.