WARNING: This article contains distressing material including references to suicide, self-harm and sexual abuse.

Exclusive Investigation Reveals Global Threat of 'Com' Network

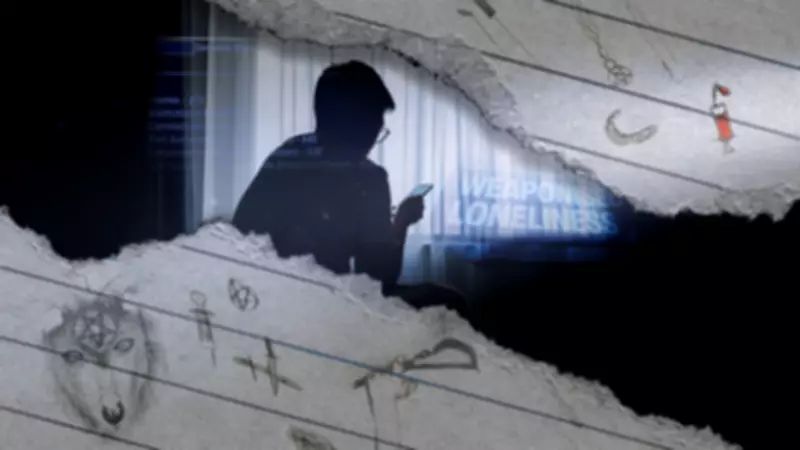

A mother's voice trembles as she turns the pages of her teenage son's sketchbook. "I'm so sad to think about things that he's seen and heard that are so horrendous," Rachel tells Sky News, her identity protected for safety reasons. The drawings inside are child-like yet deeply disturbing - knives, blood-filled syringes, upside-down crosses, and dismembered bodies. Phrases like "I am a sociopath", "I am god" and "I just want to be loved" accompany images of blades.

A Window Into a Dark Digital World

Rachel had given her son the sketchbook to help him express his thoughts, never imagining it would reveal his involvement with what intelligence experts call the Com - a loose network of online sadistic groups predominantly involving children and young adults. This network, detailed in an exclusive report shared with Sky News by intelligence risk firm Resolver in partnership with the Molly Rose Foundation, operates on a system where self-harm and child sexual abuse material serve as "social currency" rather than being traded for money.

For Rachel's son, the descent began innocently enough through gaming. Starting with Roblox offline at eight or nine years old, he progressed to online play with distant friends, eventually discovering Discord for sharing gaming tips. "I always felt like he was safe," Rachel recalls, unaware of the transformation occurring beneath the surface.

The Disturbing Discovery

When his school confiscated his phone, Rachel and her husband searched his room, uncovering hidden razor blades, pocket knives, and kitchen knives concealed in small boxes. Accessing his phone revealed hundreds of seemingly innocent pastel memes that, upon closer inspection, contained disturbing imagery - smeared black eyes, blood from sockets, and bloodied skin with captions like "I don't matter" and "Make me bleed and tell me how pretty I look."

Rachel realised her neurodivergent son, seeking belonging, had been systematically exposed to mental health language, anorexia-style content, self-harm material, and sexualised content over ten months of escalation. One of the people he communicated with was from Croatia, highlighting the network's global reach.

Parental Intervention and Ongoing Trauma

The family removed all digital devices, replaced his smartphone with an internet-free flip phone, restricted gaming to school friends only, and moved his bedroom closer to theirs. While Rachel reports her son is now in "a great space," he remains unable to discuss his experiences, once correcting her: "I wasn't in a cult. I was being groomed."

Rachel's guilt persists. "I allowed his world view, during this really critical period between 10 and 13, to be developed meme by meme, line by line, text by text," she confesses, fearing she hasn't seen the full extent of what he witnessed.

Resolver Report Details Systematic Exploitation

The new Resolver report describes an emerging global threat in which Com groups exploit vulnerabilities through strands known as the sadism Com and terror Com, "gamifying" harm and using abuse to gain notoriety within their ecosystem. Victims are targeted through online subcultures, with few digital spaces remaining unaffected.

How the Network Operates

At its core, the Com operates a system where violence and exploitation earn status points, with "crime as a service" openly advertised. Children and young people serve as both victims and, in some cases, perpetrators. The network specifically targets vulnerabilities, creating fake support groups for neurodiverse and LGBTQ+ communities while exploiting mental health issues, eating disorders, and self-harm struggles through forums, games, and messaging platforms.

Resolver's investigation reveals these groups lack a single ideology, with far-right imagery, occult symbolism, and extremist language often being performative. Members drift between groups, complicating detection and disruption efforts. Although it first appeared in Europe and the US, Com activity now spans five continents.

Sky News Investigation Uncovers Criminal Activities

Sky News has identified more than 90 Com-related group chats and channels on Telegram, along with several Discord servers. Despite increasing attention, members continue to engage in criminal behaviour and to discuss it in publicly accessible online spaces.

Key findings include:

- Cybercrime operations offering stolen streaming accounts and personal details for sale

- "Swatting services" where criminals make fake emergency calls, with UK school swatting offered for as little as $20 (£14.50)

- Encouragement of vandalism and arson as membership prerequisites

- Continued focus on sexual exploitation and manipulation of victims into self-harm and suicide

In one disturbing exchange, Telegram users praised an acquaintance for making a girl self-harm, while another denigrated someone for only targeting girls with existing mental health issues, boasting that exploiting those without prior self-harm history was more impressive.

Another Mother's Warning

"Don't assume they're safe in their room," advises Sally, whose son became a Com member and was arrested for encouraging suicide. Describing her son as an animal lover and keen angler with a good sense of humour, Sally reveals how bullying left him isolated with low self-esteem, making him vulnerable to Com recruitment.

"The Com flattered him, gave him jobs - made him feel part of something," she explains. His haunting admission - "people keep getting me to do things I don't want to do" - continues to trouble her. Sally urges parents to "keep an ear outside the room" and "get a sense for what's going on," warning: "Do not assume that because your child is at home in their room that they are safe."

Regulatory Response and Criticism

The Molly Rose Foundation has criticised Ofcom's response as "appalling," accusing the regulator of being "asleep at the wheel." Chief executive Andy Burrows warns the Online Safety Act is "too timid" to handle the complex mix of harms, calling for self-harm and suicide to be treated as a national policing priority alongside child sexual abuse and violence against women and children.

Oliver Griffiths, Ofcom's online safety group director, responded: "Nobody should be in any doubt about how seriously at Ofcom we are taking the protection of children." He confirmed dedicated teams are engaging with major platforms and addressing "small but risky services."

The National Crime Agency reported "a significant rise in teenage boys joining online communities that only exist to engage in criminality and cause harm." Jess Phillips MP, minister for safeguarding, pledged the government will "use every power we have to hunt down the perpetrators, shut these disgusting networks down, and protect every child at risk," noting Home Office-funded online officers safeguarded 1,748 children from sexual abuse last year.

A Telegram spokesman stated the platform "has continually removed groups associated with the Com since they were first identified," with moderators using AI tools to remove millions of pieces of harmful content every day. Sky News has contacted Roblox and Discord for comment.

Anyone feeling emotionally distressed or suicidal can call Samaritans for help on 116 123 or email jo@samaritans.org.