YouTube's AI Moderation Sparks Creator Outcry Over Mass Channel Bans

YouTube's reliance on artificial intelligence for content moderation is facing intense scrutiny after numerous creators reported their channels being terminated erroneously, with appeals rejected within minutes. This wave of automated bans has left many content creators locked out of their earnings and forced to seek legal recourse, highlighting growing tensions between the platform's scale and its human oversight.

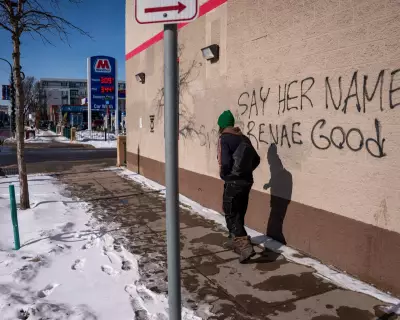

The Human Cost of Automated Decisions

Oleksandr Murashevych, a 35-year-old living in Poland, never anticipated that his automotive YouTube channel would lead to a legal battle. Within two years, his channel Chase Car had grown to 20,000 subscribers and was generating $2,000 a month from regular uploads. However, in 2024, YouTube demonetised the channel in error, forcing him to disclose extensive channel details to restore it. Despite his compliance, YouTube terminated the channel four months later, labelling it as spam, a decision Oleksandr attributes to flawed AI moderation systems.

'The appeal process was a nightmare,' Oleksandr tells Metro. 'So, I started to think, what can I do? How can I fight?' His persistence led to a legal case against YouTube in March, with a European out-of-court settlement body ruling that the platform failed to provide sufficient evidence for the ban. 'It proved to me that I didn't do anything wrong,' he says. 'I didn't violate any policy and it showed me the termination process was wrong.'

A Widespread Issue Affecting Diverse Creators

Oleksandr's experience is not isolated. Dozens of YouTubers, including amateur animators, Roblox gamers, and true crime enthusiasts, have reported similar issues, alleging that YouTube's AI systems are mistakenly flagging their content as 'scam/spam' or for obscure rule violations. Emails reviewed by Metro reveal that appeals are often rejected within minutes, even for channels with hundreds of videos, locking creators out of substantial earnings through the YouTube Partner Program.

Niall, a 16-year-old student from Ireland, had his 500,000-subscriber account terminated for spam in October, after it had earned him nearly £9,000. 'I think it's bizarre that 300,000,000 people can enjoy your content but AI moderation and a time-crunched support worker can destroy it,' he says. When his own attempts to resolve the issue via YouTube's live chat failed, his parents intervened, only to receive a rejection in under 20 seconds.

Similarly, Josh lost his 650,000-subscriber channel in November after it was labelled as 'spam/scam', and £590 of his earnings were withheld. 'I submitted my appeal at around 8.10am and it was rejected in three minutes,' he recounts, describing the loss as gutting for someone who makes his animations alone.

Campaigning for Human Review and Accountability

In response, many creators are campaigning on social media, tagging TeamYouTube in hopes of gaining human attention. Shaz, a 27-year-old YouTuber, has compiled a database of 260 accounts terminated since last January, with only 37 reinstated. 'It appears as though the AI is occasionally mistaking human content with qualities of automated spam,' he says, emphasising that he verifies each incident. 'I personally believe this story will be seen as one of YouTube's darkest moments in its history.'

Among the restored channels is The Dark Archive, run by Alaa, a 25-year-old from Egypt. His channel, which aimed to fund his brother's medical bills, was terminated for 'misleading viewers' before YouTube reversed its decision after he campaigned on X. 'When it's a small creator with no audience? They don't care at all,' he remarks, underscoring the disparity in treatment.

YouTube's Response and the Broader Context

YouTube acknowledged these concerns in a blog post last November, noting that nearly 5,000,000 channels were removed between January and June, mostly for spam/scam. The platform stated that most bans were upheld after review, citing misleading or shocking content designed to farm engagement, while others violated circumvention policies. However, appeal times vary, and verdicts are not always clear, as reviewers rely on flagged content.

In a statement to Metro, YouTube said: 'We have used a combination of humans and AI to handle the scale and complexity of YouTube for many years. We investigated thoroughly and confirmed that our Community Guidelines enforcement systems are working normally.' TeamYouTube attributed the recent rise in terminations to an increase in financial scams from Southeast Asia, but this offers little solace to affected creators like Oleksandr, who feel trapped by YouTube's dominance. 'After this horrible experience, I'd happily forget about YouTube,' he says, 'but I have nowhere else to go.'