South Korea's Pioneering AI Legislation Sparks Debate Amid Global Tech Ambitions

South Korea has implemented what it describes as the world's first comprehensive artificial intelligence regulations, positioning itself at the forefront of global AI governance while simultaneously pursuing ambitions to become a leading technological power. The new legislation, which took effect recently, represents a significant milestone in the international effort to regulate rapidly advancing AI technologies that have outpaced traditional legal frameworks.

Balancing Innovation with Regulation

The AI Basic Act introduces several groundbreaking requirements for companies operating in South Korea's technology sector. Under the new regulations, providers of AI services must implement invisible digital watermarks for clearly artificial outputs such as cartoons and artwork, while realistic deepfakes require visible labelling. The legislation creates a special category for "high-impact AI" systems used in critical areas including medical diagnosis, hiring processes, and loan approvals, mandating that operators conduct thorough risk assessments and document their decision-making processes.

Government officials maintain that the legislation is primarily about promoting industry growth rather than restricting innovation, with estimates suggesting that 80-90% of the law's provisions are aimed at supporting technological advancement. Nevertheless, the approach has met significant resistance from several quarters of South Korean society.

Startup Concerns and Compliance Challenges

Local technology startups have expressed substantial concerns about the regulatory burden imposed by the new legislation. A December survey conducted by the Startup Alliance found that 98% of AI startups were unprepared to comply with the new requirements. Lim Jung-wook, co-head of the alliance, voiced a frustration widespread in the startup community, questioning why South Korean companies should be the first to operate under such comprehensive regulation.

The legislation requires companies to assess for themselves whether their systems qualify as high-impact AI, a process that critics describe as lengthy and uncertain. The framework may also create competitive imbalances: all domestic companies are regulated regardless of size, while only foreign firms that meet specific thresholds (industry giants such as Google and OpenAI, for example) must comply with the same requirements.

Civil Society Criticisms and Protection Gaps

While startups argue the regulations go too far, civil society organisations contend they do not go far enough in protecting citizens from AI-related harms. Four prominent organisations, including Minbyun, a collective of human rights lawyers, issued a joint statement arguing that the law contains almost no provisions to protect individuals from AI risks. The groups noted that while the legislation stipulates protection for "users", the term refers primarily to institutions such as hospitals and financial companies rather than to the individuals affected by AI systems.

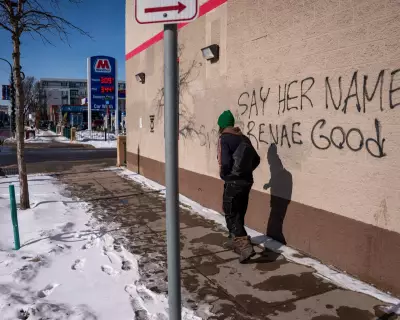

The regulatory context is particularly significant given South Korea's troubling statistics regarding AI misuse. According to a 2023 report by Security Hero, a US-based identity protection firm, South Korea accounts for 53% of all global deepfake pornography victims. This alarming statistic gained renewed relevance following investigations in August 2024 that exposed massive networks of Telegram chatrooms creating and distributing AI-generated sexual imagery of women and girls.

A Distinct Regulatory Approach

Experts note that South Korea has deliberately chosen a different regulatory path from other major jurisdictions. Unlike the European Union's strict risk-based model, the United States and United Kingdom's largely sector-specific approaches, or China's combination of state-led industrial policy and detailed service-specific regulation, South Korea has opted for a more flexible, principles-based framework.

Melissa Hyesun Yoon, a law professor at Hanyang University specialising in AI governance, describes this approach as "trust-based promotion and regulation." She suggests that South Korea's framework will serve as a valuable reference point in global AI governance discussions, offering a middle ground between restrictive regulation and laissez-faire approaches.

Implementation and Future Adjustments

Companies that violate the new regulations face fines of up to 30 million won (approximately £15,000), though the government has promised a grace period of at least one year before penalties are imposed. The legislation also requires safety reports for extremely powerful AI models, although the threshold is set so high that government officials acknowledge no model worldwide currently meets it.

In a statement, the Ministry of Science and ICT expressed confidence that the law would "remove legal uncertainty" and build "a healthy and safe domestic AI ecosystem," while acknowledging that further clarification would be provided through revised guidelines. The country's human rights commission has criticised the enforcement decree for lacking clear definitions of high-impact AI, noting that those most likely to suffer rights violations remain in regulatory blind spots.

As South Korea navigates this untested regulatory landscape, observers abroad will be watching to see whether its attempt to balance innovation with protection produces a sustainable framework for AI development while addressing the legitimate concerns raised by both industry and civil society.