The AI Dilemma: Innovation Versus Caution in Europe

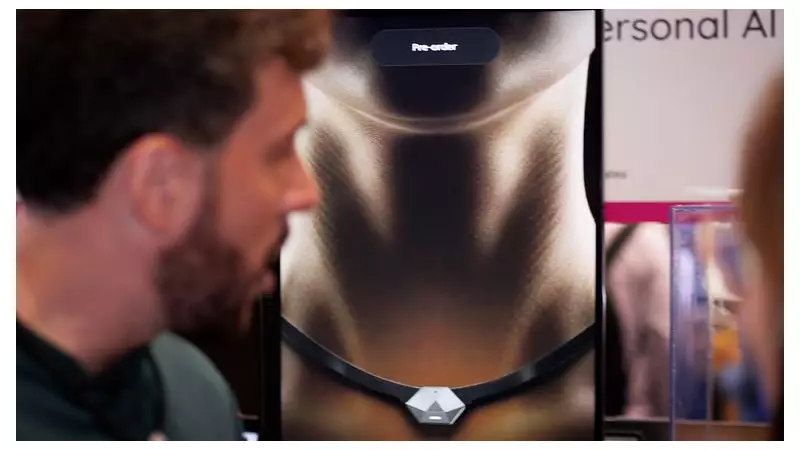

The global tech elite descended upon Lisbon for the sprawling Web Summit conference, where a new buzzword dominated conversations: agentic AI. From AI agents integrated into jewellery to software designed for seamless workflow integration, the technology was showcased across more than twenty panel discussions.

This phenomenon has even penetrated mainstream culture, with the Daily Mail recently listing 'agentic' as an 'in' word for Generation Z. But what exactly is it? Agentic AI refers to artificial intelligence capable of performing specific tasks autonomously, such as booking flights, ordering transport, or assisting customers without constant human oversight.

A Technology with Deep Roots

Despite its current trendiness, the concept is far from novel. Back in the 1990s, Babak Hodjat, now Chief AI Officer at Cognizant, invented the natural language technology that went on to power Siri, one of the world's most famous AI agents.

"Back then, the fact that Siri itself was multi-agentic was a detail that we didn't even talk about - but it was," Mr Hodjat told Sky News at the event. He traced the philosophical origins of such technology even further back, noting, "Historically, the first person that talked about something like an agent was Alan Turing."

Autonomy, however, brings heightened risks. Unlike general-purpose AI, agentic systems act on and change real-world systems directly, magnifying concerns such as data bias and unforeseen consequences.

The Magnified Risks of Autonomous AI

The IBM Responsible Technology Board emphasised these new challenges in its 2025 report. It highlighted a specific emerging risk: an AI agent might modify a dataset in a way that introduces bias. If that bias then spreads at scale and goes undetected, the change could be irreversible, potentially affecting real-world decisions and systems.

Yet, for Mr Hodjat, the primary concern isn't the agents themselves, but human behaviour. "People are over-trusting [AI] and taking their responses on face value without digging in and making sure that it's not just some hallucination that's coming up," he cautioned.

He stressed that it is "incumbent upon all of us to learn what the boundaries are" and to educate both ourselves and future generations on where to place trust in these systems.

Is European Prudence a Strategic Error?

This call for careful handling resonates strongly in Europe, where a more wary approach to AI prevails than in the United States. The EU AI Act, which entered into force in August 2024, established one of the world's first comprehensive regulatory frameworks for artificial intelligence.

But is this caution coming at a cost? Jarek Kutylowski, CEO of the German AI translation giant DeepL, believes so. Asked whether innovation should slow down to make room for stricter regulation, he acknowledged the dilemma but argued that Europe is taking it too far.

"Looking at the apparent risks is easy," Mr Kutylowski stated. "Looking at the risks like what are we going to miss out on if we don't have the technology... that is probably the bigger risk."

He expressed grave concern about "Europe being left behind in the AI race," warning that the economic consequences would not be immediately visible. "You won't see it until we start falling behind and until our economies cannot capitalise on those productivity gains that maybe other parts of the world will see."

His conclusion was a pragmatic one: technological progress is unstoppable, and the critical question for Europe is how to embrace what lies ahead without crippling its competitive edge.