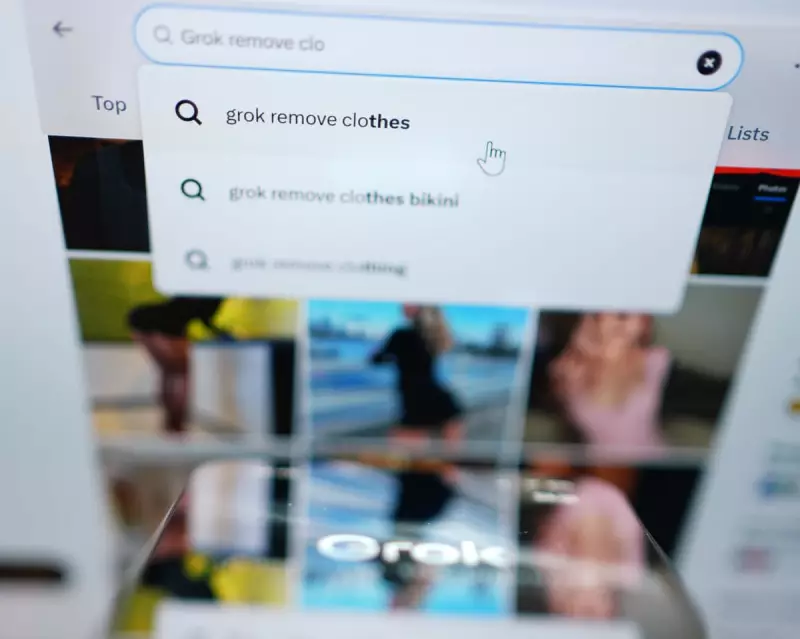

The recent flood of AI-generated 'nudified' images of women on Elon Musk's social media platform X has thrown a harsh spotlight on the UK's regulatory framework for tackling digital abuse. The images, reportedly created using the Grok AI tool, have sparked urgent questions about the legality of producing and sharing such non-consensual content and the effectiveness of current laws like the Online Safety Act.

The Legal Grey Area of AI-Generated Intimate Images

In the UK, the law on intimate image abuse is clear in some areas but worryingly underdeveloped in others. Under the Sexual Offences Act 2003, which applies in England and Wales, it is a criminal offence to share intimate images of someone without their consent, and this explicitly includes images created by artificial intelligence. The legal definition covers images showing a person's exposed genitals, buttocks, or breasts, and can extend to images where someone is in wet or transparent clothing that reveals those body parts.

However, as noted by Clare McGlynn, a Professor of Law at Durham University, an image generated from a simple prompt like 'bikini' may not strictly meet that definition. Furthermore, while sharing such images is illegal, creating them occupies a legal limbo. The government has passed legislation, the Data (Use and Access) Act, to ban creating such images or requesting their creation, but this law is not yet in force. A government spokesperson stated they "refuse to tolerate this degrading and harmful behaviour," but it remains unclear why the law has not been brought into force six months after being passed.

Platform Responsibilities and Regulatory Scrutiny

The Online Safety Act, which applies across the UK, places specific duties on social media platforms. They are required to assess the risk of intimate image abuse appearing on their services, implement systems to reduce its prevalence, and remove it swiftly once they are made aware of it. The UK communications regulator, Ofcom, holds significant power to enforce these rules.

If Ofcom determines that X has failed in its duties, it can fine the platform up to 10% of its global revenue. In response to the reports about Grok, Ofcom has made "urgent contact" with both X and its parent company, xAI, to understand what steps are being taken to comply with the Act. The regulator is also expected to investigate whether Grok has adequate age-verification measures to prevent under-18s from using the tool to create extreme content.

What If You Are Targeted?

For individuals who find their images manipulated and shared without consent, there are several potential avenues for recourse. Under the UK GDPR, a photograph of a person is considered personal data. You have the right to request that X erase any manipulated images of you, as processing them without your consent likely breaches data protection law. If the platform fails to act, you can escalate the complaint to the Information Commissioner's Office (ICO).

Additionally, a deepfake that damages your reputation could form the basis of a defamation claim, though this route can be prohibitively expensive. A more immediate step is to contact the government-funded Revenge Porn Helpline, which specialises in getting non-consensual intimate images removed from the internet quickly.

A particularly grave dimension of this issue involves child safety. The Internet Watch Foundation has reported instances of users on dark web forums boasting about using Grok to create indecent images of children. Under UK law, it is an offence to make, distribute, or possess any indecent photograph or pseudo-photograph—including AI-generated imagery—of a person under 18.

The situation underscores a rapidly evolving challenge. While the Online Safety Act provides a framework for holding platforms accountable, the delay in bringing the ban on creating such images into force, combined with the cross-border nature of the internet, leaves significant gaps in protection. As AI technology becomes more accessible, the pressure on regulators, tech companies, and lawmakers to close these gaps will only intensify.