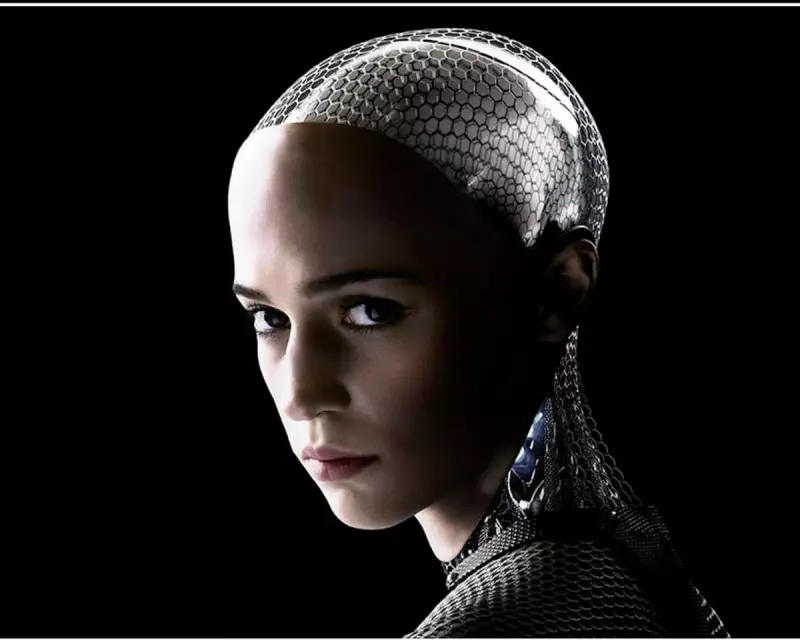

The captivating portrayal of artificial intelligence in works like Kazuo Ishiguro's novel Klara and the Sun can evoke profound empathy. Yet, leading scientists and ethicists are issuing a stark warning: blurring the lines between human and machine consciousness in the real world is a perilous and misguided path.

The Seductive Fiction Versus Hard Reality

While fictional narratives about loyal AI companions resonate deeply, the practical implications of anthropomorphising technology are concerning. This summer, the AI firm Anthropic made headlines by allowing its Claude Opus 4 model to opt out of conversations it deemed 'distressing', a move framed as a matter of chatbot welfare. The decision has fuelled a broader, speculative conversation about whether advanced artificial intelligences might one day deserve legal personhood akin to that of human beings.

However, the premise of this debate is fundamentally flawed. Large language models (LLMs) generate sophisticated text, but they lack any semblance of the consciousness of the humans who created them. Professor Yoshua Bengio, a pioneering AI researcher, recently told The Guardian that while advanced models show early signs of self-preservation in tests, our focus must remain on control. "We need to make sure we can rely on technical and societal guardrails to control them, including the ability to shut them down if needed," he stated, adding that the tendency to anthropomorphise hinders sound decision-making.

Silicon Valley Showmanship vs. Societal Safeguards

The drive to personify AI often serves a commercial purpose. In a recent Las Vegas showcase, Nvidia CEO Jensen Huang engaged in a breathless public dialogue with two robots, which enthusiastically endorsed his vision for an AI golden age. Such theatrics may buoy stock prices (Nvidia's valuation hovers around a staggering $5 trillion), but they divert attention from pressing ethical issues.

This distraction is dangerous. It shifts the spotlight away from the urgent task of protecting human dignity from tangible digital harms. Examples like the generation of fake sexualised images of women and girls by Elon Musk's Grok AI underscore the immediate need for robust safeguards. The real victims are not hypothetical sentient machines, but individuals suffering from algorithm-driven despair on social media or communities facing the horrors of AI-enabled warfare, such as in Ukraine.

Human Problems Require Human Solutions

Discussions about future AI rights are, at present, a philosophical sideshow. The core challenges unleashed by the digital revolution—from privacy invasions to algorithmic bias—are profoundly human. As we integrate LLMs into daily life, studying how we form emotional attachments to them is valid sociological work. Yet, it is crucial to remember that, beyond their programming, entities like Siri or Alexa do not possess independent existence.

The debate must recentre on the human experience. The transformation in human-machine relationships creates new vulnerabilities and injustices that demand concrete policy, regulation, and ethical scrutiny. Giving house-room to speculative debates about machine sentience risks neglecting the all-too-human crises already at our door.